Author: Bruce McMenomy

-

“These students nowadays…”

The November 2024 issue of The Atlantic contains an article (“The Elite College Students Who Can’t Read Books”) that has been raising eyebrows and ire ever since. In it, Rose Horowitch notes that academics at some prestigious universities have concluded that few incoming freshmen are ready for extended reading, many admitting that they have never been required to…

-

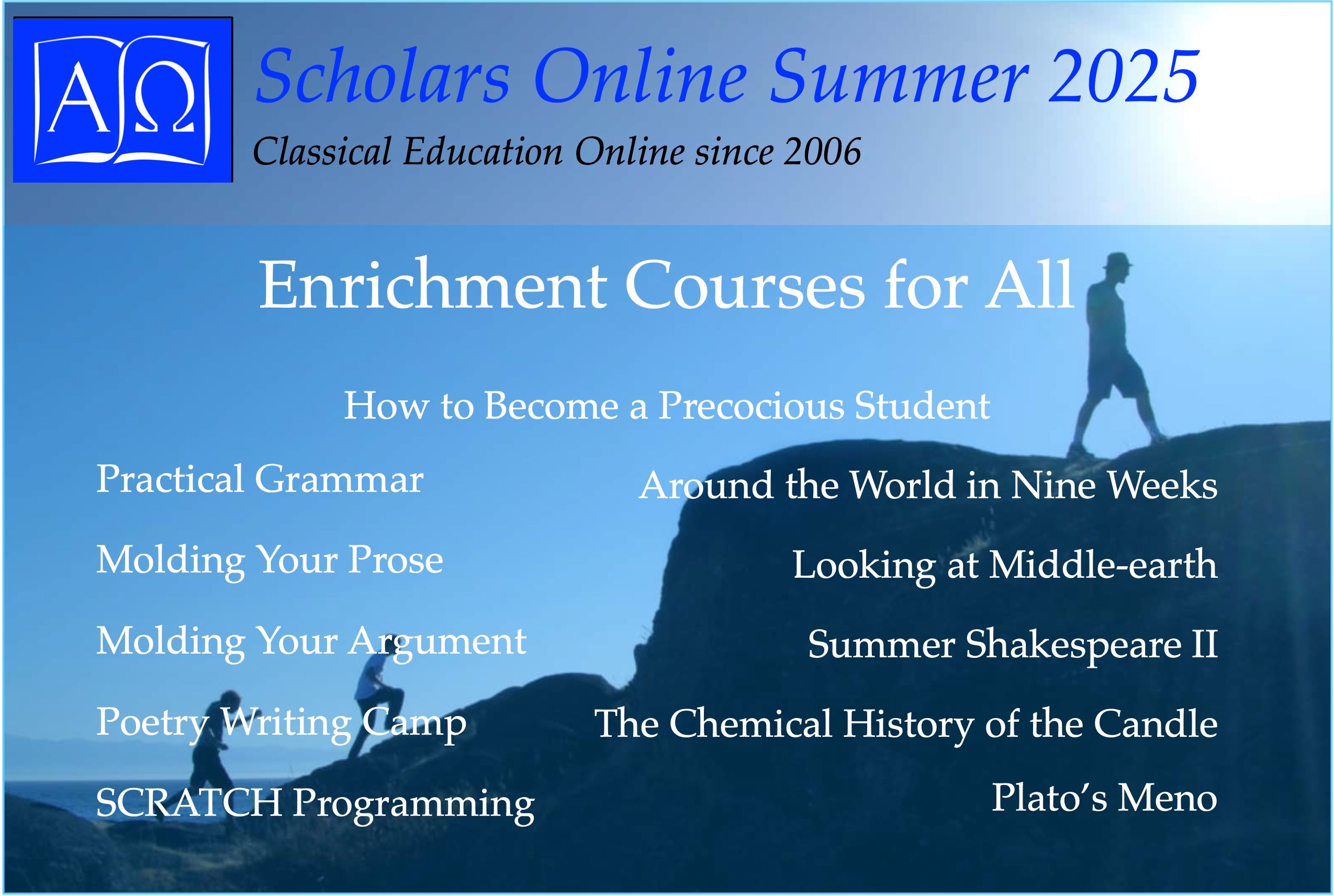

Summertime, and the Learning is Easy

The traditional school year in the United States and much of the rest of the world has been faulted for inefficiency: surely we could get more learning done, or do the same amount in less time, by simply continuing throughout the year, and not taking the summer off for what the English call the “long…

-

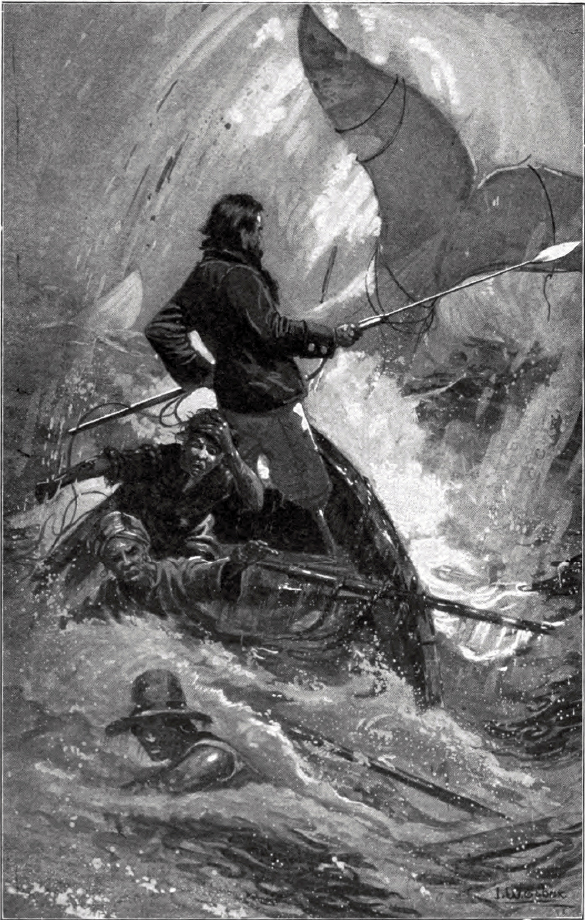

November 14: Moby-Dick

“Call me Ishmael.” Herman Melville’s Moby-Dick begins with a three-word imperative, one of the most famous openings ever written for a novel. That it is the product not of the late twentieth century, but of the mid-nineteenth, is especially remarkable. Whereas most novels of its day ease the reader into the unfolding story by stages, this…