Category: Philosophy

-

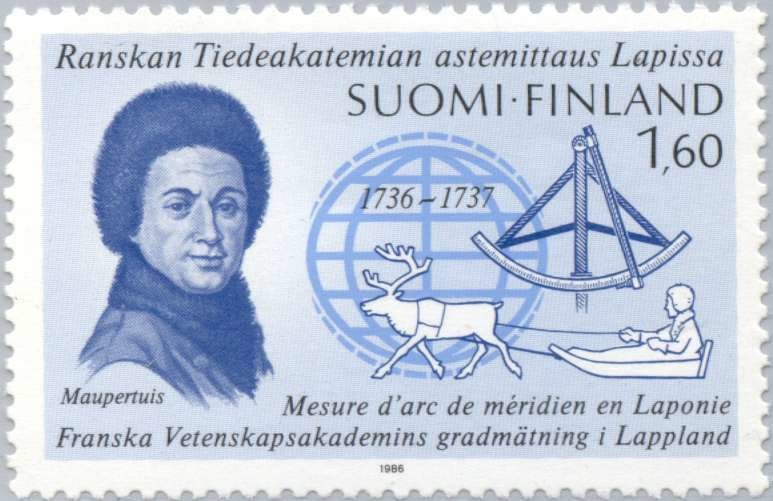

December 3: The Shape of the Earth

Anders Celsius shows up on today’s list of events, although it is the anniversary neither of his birth nor of his death, but of an event in which he played a minor but crucial role. You may know from chemistry or biology the Celsius temperature scale, which portioned out 100 degrees between zero at water’s boiling point and…

-

December 2: Chromatius

December 2 is the feast of Chromatius, bishop of Aquileia in Italy, who died in 406. He is one of those minor early Church Fathers who don’t get a lot of press, and he shows up only in the Roman Catholic and Eastern Orthodox Calendars, but he’s definitely worth more than a cursory glance.…

-

November 27: Clovis I

This is rather long, but bear with me. For the past month, I’ve been exploring topics inspired by the lists of events, births, or saints for the day in Wikipedia and the Britannica, trying to see whether one or another of these might help illuminate the goals, methods, or outcome of a classical Christian education.…