Category: Mathematics

-

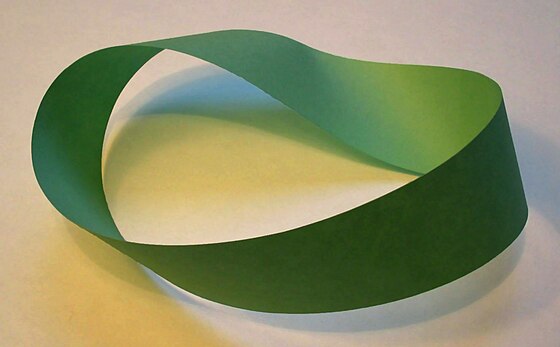

November 17: August Ferdinand Möbius

On this day in 1558, Mary Tudor, Queen of England and wife of Philip II of Spain, died at St. James's Palace and was succeeded by her half-sister, Elizabeth. In 1869, the first ships officially passed through the Suez Canal in Egypt, creating a more efficient trade route for the countries along the Indian Ocean and…

-

Scholars Ponder Top Gun

The movie Top Gun: Maverick is breaking box office records. Here are some questions that online scholars may ponder to deepen their understanding of the film. Mathematics: How do the box office receipts compare with those of other movies? What does it mean to adjust the receipts for inflation? Physics: Which flying maneuvers generate forces on the…

-

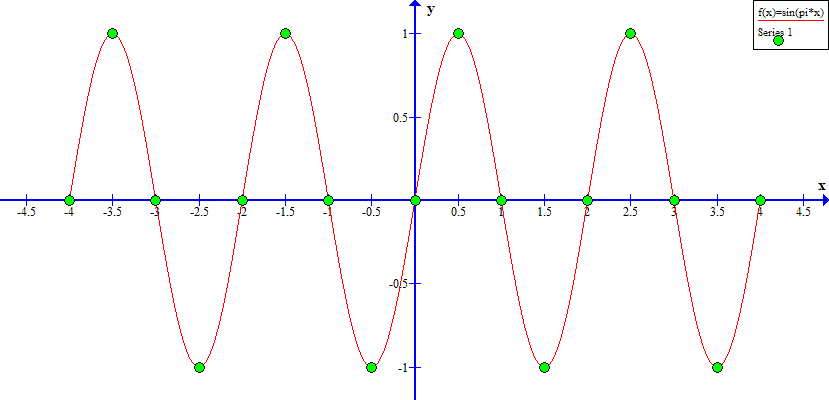

Continuous? Step-wise? What’s up with that?

You may have seen a picture of a strip of movie film. A movie film consists of a long sequence of still images, presented so rapidly that the viewer perceives them as motion. This is true whether the pictures are photographs of something with physical existence or are drawn or composed artwork. (Collecting…